NIC offload functions in the Dragonet network stack

Some context

A month ago, we presented a paper at TRIOS about managing NIC queues in Dragonet. Dragonet is a network stack developed at ETH with the primary goal of intelligently managing NIC hardware. NIC queues are just one example of NIC hardware that can be managed by Dragonet. Another example we looked at is offload functions, which is what I’m going to talk about here.

Since I left ETH to join IBM Research, I have been focusing on other things, so I wanted to write some of this down before I forget it.

For background information, please check the Dragonet HotOS paper that describes the motivation, as well as the PLOS and TRIOS papers.

Keep in mind that the ideas presented here are not fully fleshed out. The discussion concerns our models, and the approach has not been fully implemented in Dragonet’s execution (even though most of the pieces are there). Furthermore, we deal only with the (relatively simple) case of checksum offloads. We suspect that our approach holds for more complicated scenarios (e.g., offloading protocols like IPSec), but we have not verified this yet.

Motivation

Network interface cards (NICs) offer offload functions for executing parts of protocol processing that are traditionally done in software. Offload functions range from checksum computation to full protocols (e.g., IPSec offload or TCP offload engines).

In most current network stacks, support for these functions is usually inlined in the protocol implementation. For example, Linux’s udp_send_skb() function contains the following code:

    ...
    else if (sk->sk_no_check_tx) {   /* UDP csum disabled */
        skb->ip_summed = CHECKSUM_NONE;
        goto send;
    } else if (skb->ip_summed == CHECKSUM_PARTIAL) { /* UDP hardware csum */
        udp4_hwcsum(skb, fl4->saddr, fl4->daddr);
        goto send;
    } else
        csum = udp_csum(skb);
    ...

Arguably, this might not be too bad, but if we want to support additional (and more intricate) NIC offload functions, code complexity will increase.

As we see it, the fundamental problem is that the code deals with two separate concerns:

- The implementation of the network protocols

- Dealing with the NIC offload functions (or NIC hardware in general)

In Dragonet, we decouple these two concerns.

One of our goals for building Dragonet was to enable reasoning about NIC offload functions, and allow the network stack to automatically use them.

Dragonet

In Dragonet, we model the NIC and the network stack as dataflow graphs.

The network stack graph describes the processing (or construction) of incoming (or outgoing) packets. We call it the Logical Protocol Graph (LPG). The LPG contains both static components, such as the computation of the UDP checksum for all UDP packets being sent, and dynamic components, such as the steering of incoming packets to applications that have bound a socket.

The NIC graph is called the Physical Resource Graph (PRG). The PRG describes the capabilities of the NIC, along with its configuration, i.e., how different configuration options affect the behavior of the NIC. To simplify the discussion, I will assume in this post that the NIC is already configured.

LPG: Modeling the network stack

The graphs for real protocols (even simple ones like UDP) can get quite complicated, so I’ll use a mock protocol stack with two protocols called I and P. Protocol I offers a ping-pong facility (think of ICMP echo request/reply), while P has two fields: one containing a packet checksum, and one containing a port for steering packets to different applications. I’ve ignored many things (e.g., the network layer does not have addresses), but we have applied the same ideas to Dragonet’s UDP/IP stack.

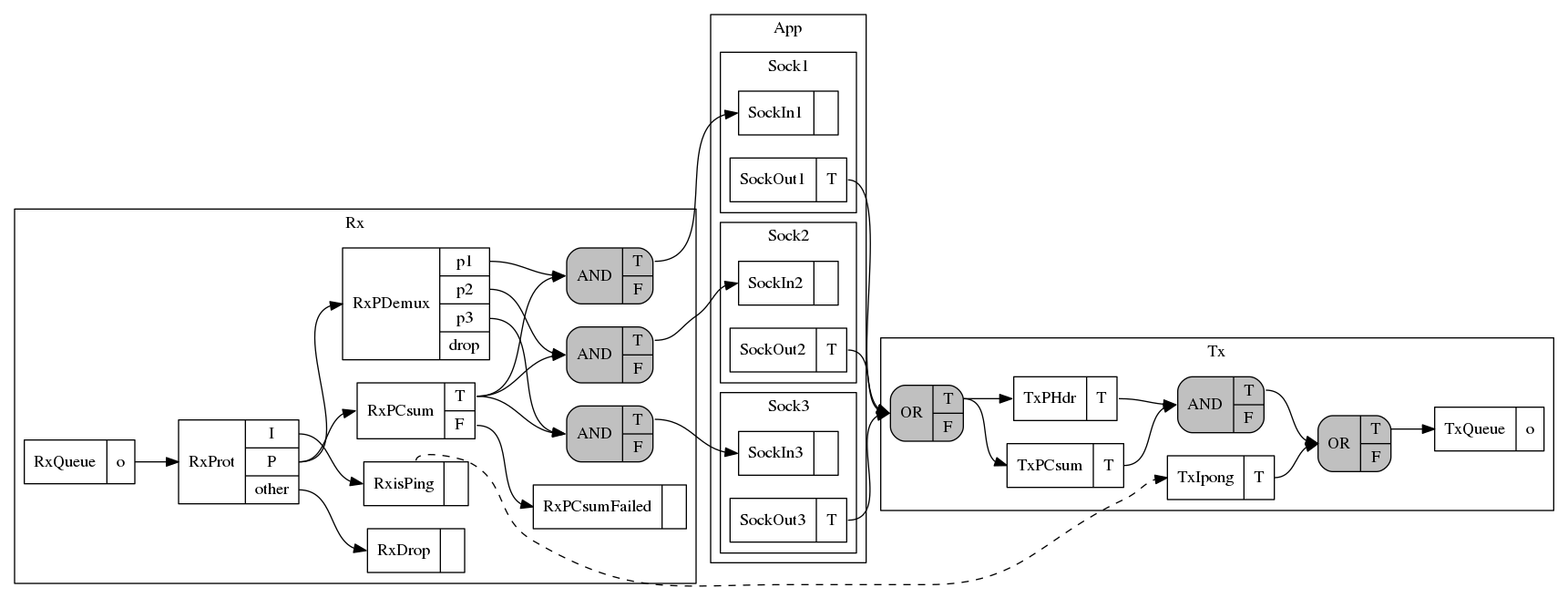

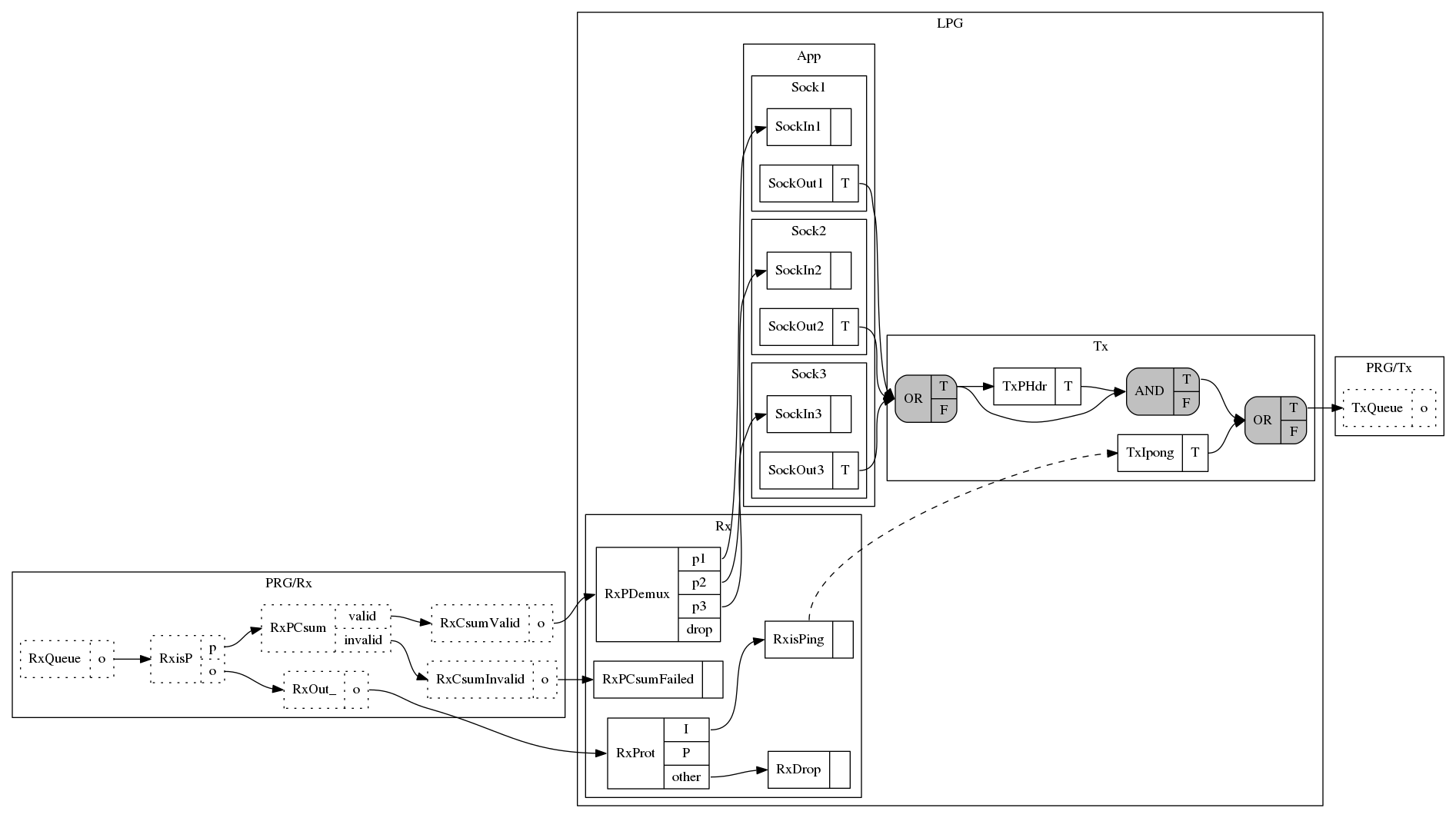

The LPG for the I/P stack is shown below.

The graph is organized in three clusters:

- Rx: the receive side of the network stack

- Tx: the send side of the network stack

- App: applications.

The graph layout (automatically generated by dot) might look somewhat strange because the network stack is split into two parts with applications in between. Applications communicate with the stack via a socket containing two queues: one for receiving packets (e.g., SockIn1) and one for sending packets (e.g., SockOut1).

Nodes with gray background are logical operators, while nodes with white background perform protocol-specific computations. We call the latter Function nodes or F-nodes. For example, the RxPCsum node checks the checksum of the packet and enables the true port (T) if the checksum is correct, or the false port (F) otherwise.

Packets from the NIC arrive at the receive queue (RxQueue). P packets are steered to the proper socket queue based on their port if the checksum is valid. I packets that request a ping generate a pong packet to be sent. The latter is shown with a dashed edge on the graph. We call these edges spawn edges because they denote that it is possible for one node (in this case RxisPing) to “spawn” a task to another node (TxIpong). As far as the core dataflow model is concerned, however, the RxisPing node is terminal. You can find more details about the model and spawn edges in Antoine Kaufmann’s MSc thesis.
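To make the receive path concrete, here is a minimal sketch in Python (not Dragonet's Haskell) of F-nodes that each enable exactly one port, plus a spawn edge that sits outside the core dataflow. The `Packet` fields, the `RxPDemux` node name and the `receive` helper are assumptions made for illustration; logical operator nodes are left out.

```python
from dataclasses import dataclass

@dataclass
class Packet:
    proto: str              # "I" or "P"
    port: int = 0           # P only: destination port
    csum_ok: bool = True    # P only: stands in for a real checksum comparison
    is_ping: bool = False   # I only: ping request?

# F-nodes: each function returns the name of the single port it enables.
NODES = {
    "RxQueue":  lambda pkt: "out",
    "RxProt":   lambda pkt: pkt.proto,
    "RxPCsum":  lambda pkt: "T" if pkt.csum_ok else "F",
    "RxisPing": lambda pkt: "T" if pkt.is_ping else "F",
    "RxPDemux": lambda pkt: str(pkt.port),   # hypothetical demultiplexing node
}

# Ordinary dataflow edges: (node, enabled port) -> next node.
EDGES = {
    ("RxQueue", "out"): "RxProt",
    ("RxProt", "P"): "RxPCsum",
    ("RxProt", "I"): "RxisPing",
    ("RxPCsum", "T"): "RxPDemux",
    ("RxPCsum", "F"): "RxPCsumFailed",
    ("RxPDemux", "1"): "SockIn1",
}

# Spawn edges: they schedule work elsewhere but are not part of the dataflow,
# so RxisPing stays a terminal node.
SPAWN = {("RxisPing", "T"): "TxIpong"}

def receive(pkt):
    """Walk the graph for one packet; return (visited nodes, spawned tasks)."""
    node, path, spawned = "RxQueue", [], []
    while node in NODES:
        path.append(node)
        port = NODES[node](pkt)
        if (node, port) in SPAWN:
            spawned.append(SPAWN[(node, port)])
        node = EDGES.get((node, port))
    if node is not None:        # a sink such as SockIn1 or RxPCsumFailed
        path.append(node)
    return path, spawned
```

Calling `receive(Packet("I", is_ping=True))` ends the walk at RxisPing and reports TxIpong as a spawned task, which mirrors the dashed edge in the graph.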

For sending packets, applications enqueue packets in their out socket queue. Subsequently, the network stack builds the header (TxPHdr), calculates the checksum (TxPCsum) and forwards the packet to the NIC.

PRG: Modeling the NIC

NICs are modeled using the same abstractions. In the simplest case, a NIC would be modeled as a pair of TxQueue, RxQueue nodes for sending and receiving packets.

Here, we are interested in offload functions, so we will consider one of the most frequently used: checksum offload. We will model our NIC to resemble the Intel i82599 10GbE NIC, which is what we use for our UDP/IP models.

We show the NIC model graphs in the subsequent paragraphs where we discuss offloading.

Offload on the send path (Tx)

We start with the send side. In the i82599, you can configure transmit contexts (two per queue) that (among other things) specify the offload functions that will be applied on packets. In these contexts you need to specify things like the L4 packet type (TCP or UDP) and various header lengths, so that the NIC can calculate the checksum. When pushing a packet to the NIC for transmission, you specify a context in the transmit descriptor.

Probably the easiest way to support these offload functions (and what the Linux driver seems to do) is to set up a context for each packet before pushing the packet to the NIC.

Returning to our mock protocol, let’s assume our (imaginary) NIC can compute the P checksum and place it in the appropriate field in the packet. To do that, we need to appropriately set the context for P packets.

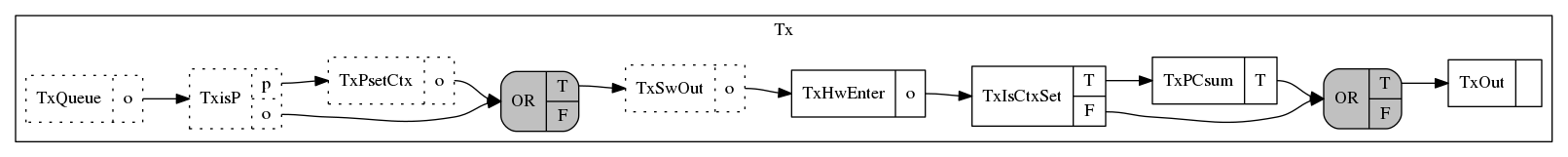

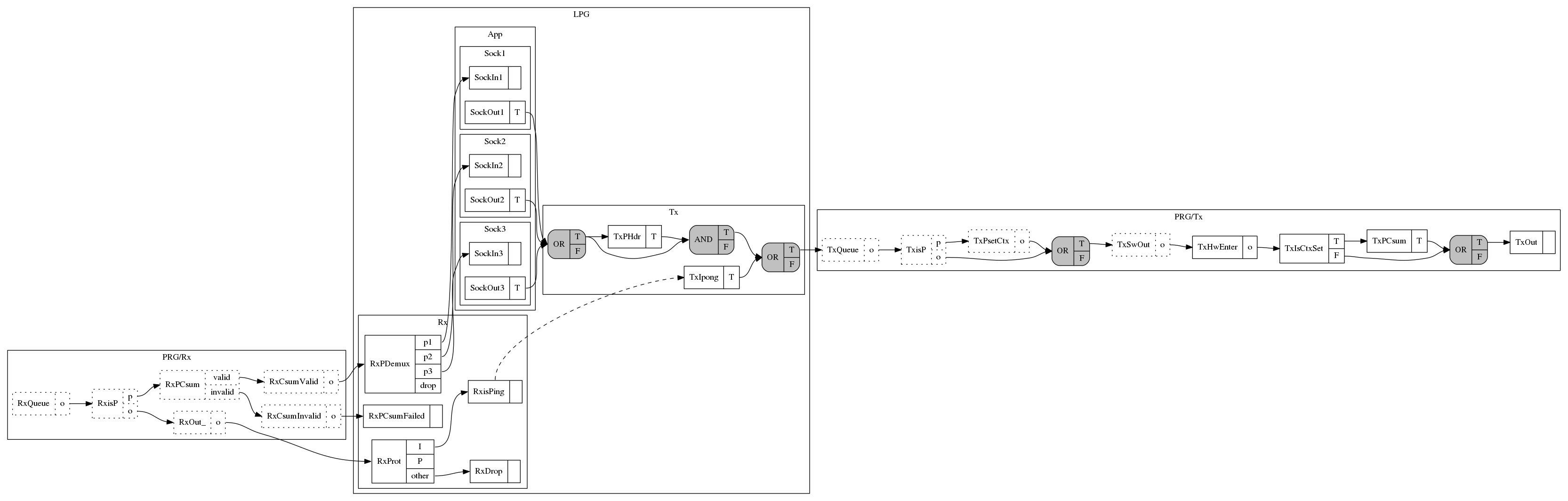

Conceptually, this NIC-specific software functionality (e.g., setting the context) is part of the NIC driver, and cannot be placed in the LPG, which is NIC-agnostic. Instead, we place NIC-specific software functionality in the PRG, using special F-nodes that are tagged as implemented in software rather than in hardware (software nodes). The send side of our NIC’s PRG is shown below:

The PRG is split into two parts: software (nodes with dotted lines) and hardware. The TxPsetCtx node, for example, is a software node responsible for setting the appropriate NIC context before sending the packet.

Dragonet operates by connecting the LPG and the PRG, an operation we call embedding. As we connect the LPG and the PRG (think of collapsing the TxQueue and RxQueue nodes of the PRG and the LPG), we perform a number of optimizations to the software nodes. One of these optimizations is removing unneeded functionality in the LPG. In this case, since the computation of the P checksum (TxPCsum) will happen in the NIC, it is unnecessary to perform it in software.

How do we decide, however, which functionality can be offloaded to the NIC and omitted from software execution? To answer this (and other) questions, Dragonet uses boolean logic to reason about the packets that enter and leave the Dragonet graphs.

To do that, we annotate F-node ports with predicates. This allows us to build boolean expressions about the state of network packets that traverse the graph. To give you an example, if I query the predicate of the TxSwOut node, i.e., what are the properties of the packets that will arrive at this node, I get (this is from ghci, since the Dragonet models are implemented in Haskell):

    *Main> prPred prg "TxSwOut"
    TxSwOut:
     OR
      AND
       pred(TxQueue,out)
       not(pred(prot,p))
      AND
       pred(TxPsetCtx,o)
       pred(TxQueue,out)
       pred(prot,p)

The expression is in DNF and is built by looking at the previous nodes in the graph.
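As a rough illustration of how such an expression can be assembled from the preceding nodes, the following Python sketch represents a DNF as a set of conjunctions and propagates port annotations along the edges. It is not Dragonet's implementation: the `TxIsP` node name, the `ANNOT` and `EDGES` tables and `node_pred` are assumptions, chosen so that the result matches the ghci output above.

```python
# A DNF expression is a set of conjunctions; a conjunction is a frozenset of literals.

# Predicate annotated on each (node, port). "TxIsP" is a hypothetical name for
# the software node that tests whether the packet's protocol is P.
ANNOT = {
    ("TxQueue", "out"): frozenset({"pred(TxQueue,out)"}),
    ("TxIsP", "T"):     frozenset({"pred(prot,p)"}),
    ("TxIsP", "F"):     frozenset({"not(pred(prot,p))"}),
    ("TxPsetCtx", "o"): frozenset({"pred(TxPsetCtx,o)"}),
}

# Edges of the software part of the send-side PRG: (source, port, destination).
EDGES = [
    ("TxQueue", "out", "TxIsP"),
    ("TxIsP", "T", "TxPsetCtx"),
    ("TxIsP", "F", "TxSwOut"),
    ("TxPsetCtx", "o", "TxSwOut"),
]

def node_pred(node):
    """DNF describing the packets that can arrive at `node`."""
    incoming = [(src, port) for (src, port, dst) in EDGES if dst == node]
    if not incoming:
        return {frozenset()}            # source node: the empty conjunction (true)
    terms = set()
    for src, port in incoming:
        for conj in node_pred(src):     # every way of reaching the predecessor...
            terms.add(conj | ANNOT[(src, port)])   # ...AND the port taken
    return terms

for conj in node_pred("TxSwOut"):
    print(" AND ".join(sorted(conj)))
```

Each incoming edge contributes its own conjunctions, so the OR at the top level corresponds to the two ways a packet can reach TxSwOut.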

We can use these boolean expressions to decide whether we can offload a node on the send path. We first compute the expression at the output of the embedded graph (TxOut). Then we remove (short-circuit) the node we want to offload and re-compute the expression. If the two expressions are equivalent, then the node can be safely offloaded.
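The equivalence test can be sketched as follows, again in Python and under stated assumptions. How the checksum semantics are attached to ports is not spelled out in this post, so the sketch assumes that both TxPCsum and the NIC's checksum hardware assert the same atom (`p_csum_set`) on their output. The atom names, `tx_out` and `can_offload` are hypothetical, and equivalence is checked by brute force over all truth assignments.

```python
from itertools import product

# Expressions are nested tuples:
#   ("atom", name) | ("not", e) | ("and", e1, ...) | ("or", e1, ...)

def atoms(e):
    if e[0] == "atom":
        return {e[1]}
    return set().union(*(atoms(sub) for sub in e[1:]))

def holds(e, env):
    op = e[0]
    if op == "atom":
        return env[e[1]]
    if op == "not":
        return not holds(e[1], env)
    results = [holds(sub, env) for sub in e[1:]]
    return all(results) if op == "and" else any(results)

def equivalent(a, b):
    """Brute-force check: same truth value under every assignment."""
    names = sorted(atoms(a) | atoms(b))
    for values in product([False, True], repeat=len(names)):
        env = dict(zip(names, values))
        if holds(a, env) != holds(b, env):
            return False
    return True

PROT_P = ("atom", "prot_p")       # packet is a P packet
CTX    = ("atom", "ctx_set")      # TxPsetCtx has set the NIC context
CSUM   = ("atom", "p_csum_set")   # the P checksum field is filled in

def tx_out(sw_csum, hw_csum):
    """Predicate at TxOut for a toy send path."""
    p_path = [PROT_P, CTX]
    if sw_csum:
        p_path.append(CSUM)       # asserted by the LPG node TxPCsum
    if hw_csum:
        p_path.append(CSUM)       # asserted by the NIC hardware
    return ("or", ("and", *p_path), ("not", PROT_P))

def can_offload(hw_csum):
    """Compare TxOut with TxPCsum present and with TxPCsum short-circuited."""
    return equivalent(tx_out(True, hw_csum), tx_out(False, hw_csum))
```

With these assumptions, `can_offload(True)` holds because asserting the same atom twice changes nothing, while `can_offload(False)` fails: without hardware support, removing TxPCsum changes what is known about packets at TxOut.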

The send side (Tx) of the PRG that we use to model the NIC is shown below, where the LPG node TxPCsum was offloaded. Note that it is possible to remove the AND node, but our graph optimizer does not currently support it.

Offload on the receive path (Rx)

Let’s discuss the receive path. The Intel i82599 NIC can offload checksum calculations on received packets, including the IPv4 header checksum and the TCP/UDP checksum. It is worth noting that there are restrictions on the types of packets for which the NIC can do this (e.g., some IPv6 extension headers). The offloading works by the NIC setting a bit on the receive descriptor to indicate whether the checksum is correct or not. The OS can check the bit, instead of calculating the packet checksum, to determine the validity of the packet. Note that other NICs may work in different ways.

Let’s assume that our NIC for I/P works in a similar manner. The first approach we tried was replacing the LPG node that does the P checksum (let’s call it S) with a NIC-specific node which would perform the same function by, in this example, checking the bit in the descriptor (let’s call it H).

This is a simple and neat idea, but has some problems. For example, it only works if S and H have exactly the same structure (same ports). Furthermore, it cannot deal with the case that these nodes operate under different assumptions: node S might require that the packet’s protocol is P, while node H might have additional restrictions.

What we want is a way to express all the receive capabilities of the NIC in an open-ended way so that the network stack can exploit them by adjusting the LPG.

In fact, we already do something similar for the NIC’s multiple receive queues. Each receive queue has its own filters, specifying the types of packets that will be received (we use our predicate system to represent them). We expose queues separately, each with their own predicates, and attach a copy of the LPG to each queue. Based on the queue predicate, we optimize the LPG by removing unnecessary parts. For example, all applications that, according to the queue filter predicate, will not receive packets are removed. Furthermore, unnecessary nodes are removed. If, for example, only UDP packets are received, TCP processing can be completely removed from the LPG. This procedure, somewhat similar to a constant propagation compiler optimization phase, is part of our embedding algorithm.

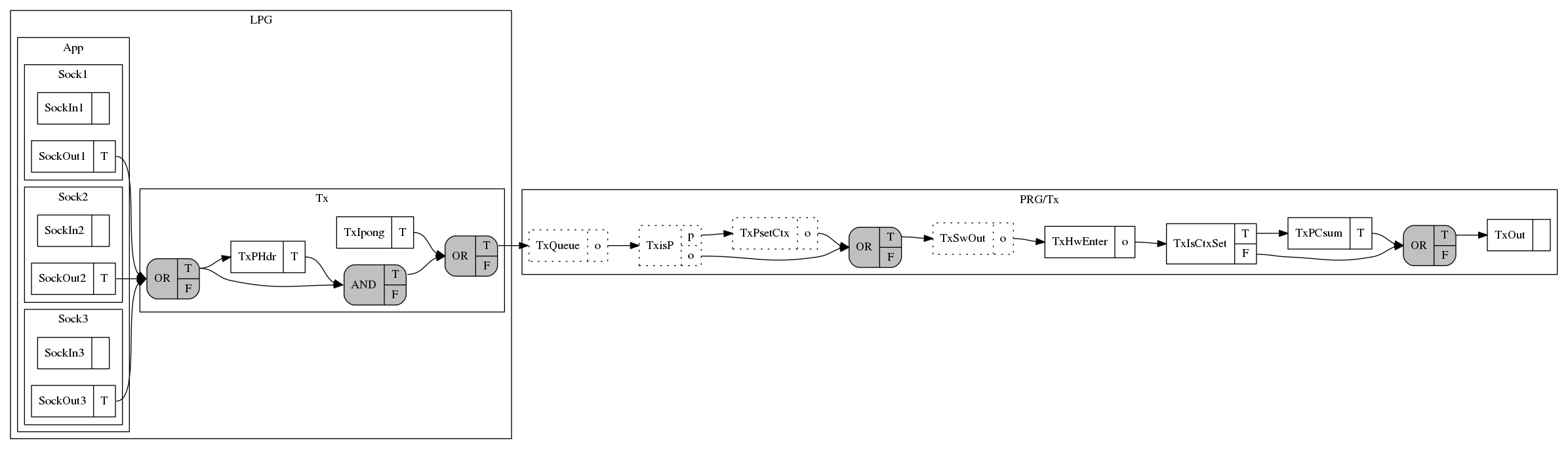

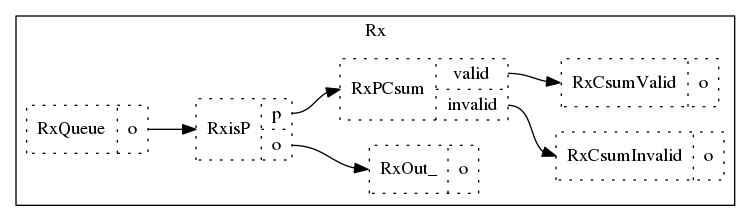

We reuse this functionality to handle receive offload functions, by exposing multiple sink nodes in the PRG. The PRG for our I/P NIC is shown below:

Note that all the nodes in the graph are software nodes. The packet is fetched from the NIC receive queue, and the driver checks whether it is a P packet. If it is, the driver then checks the bit in the receive descriptor set by the NIC.

The PRG exports three sink nodes to connect an LPG:

- RxOut_: protocol is not P

- RxCsumValid: protocol is P and P checksum is valid

- RxCsumInvalid: protocol is P and checksum is invalid
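The software nodes on the receive side of the PRG amount to a small classification step. A minimal Python sketch follows; the function name and its two parameters are assumptions, with `csum_bit_ok` standing in for the bit the NIC sets in the receive descriptor.

```python
def rx_sink(proto: str, csum_bit_ok: bool) -> str:
    """Pick the PRG sink node for a received packet.

    proto:        protocol of the packet as determined by the driver ("I" or "P")
    csum_bit_ok:  hypothetical stand-in for the checksum bit in the descriptor
    """
    if proto != "P":
        return "RxOut_"            # the checksum bit is not consulted
    return "RxCsumValid" if csum_bit_ok else "RxCsumInvalid"
```

Whatever is attached to each sink can then rely on the sink's predicate, for example that a packet arriving at RxCsumValid is a P packet with a valid checksum.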

During embedding, Dragonet connects a clone of the LPG to each NIC sink node and performs various optimizations to remove unnecessary nodes, etc. The embedded graph (without most of the send side of the PRG) is shown below:

The graph shows how embedding optimized the LPG clones:

- For the RxCsumInvalid PRG sink node, the LPG graph was reduced to a single node (RxPCsumFailed). This was done by using the predicates in the node to decide which LPG nodes are going to be used and which ones will not.

- For the RxCsumValid PRG sink node, the LPG was optimized to just demultiplex the packet to the application sockets: neither the checksum check nor the protocol (I or P) check is needed.

- For the RxOut_ node, packets still need to be demultiplexed, but because they cannot be P packets, the P port of RxProt is not connected to anything.
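The three outcomes above can be reproduced with a small pruning pass. This Python sketch is only an approximation of the idea, not Dragonet's embedding algorithm: a node whose outcome is already decided by the sink's predicate is skipped, and a port that contradicts the predicate is disconnected. The atom names (`prot_p`, `prot_i`, `csum_ok`, `dport`), the `RxPDemux` and `SockIn2` names and the helper functions are assumptions.

```python
# Receive side of the LPG: node -> {port: (predicate on that port, successor)}.
# Nodes without an entry are terminal.
LPG = {
    "RxProt":   {"P": ({"prot_p": True}, "RxPCsum"),
                 "I": ({"prot_i": True}, "RxisPing")},
    "RxPCsum":  {"T": ({"csum_ok": True}, "RxPDemux"),
                 "F": ({"csum_ok": False}, "RxPCsumFailed")},
    "RxPDemux": {"1": ({"dport": 1}, "SockIn1"),    # hypothetical demux node
                 "2": ({"dport": 2}, "SockIn2")},
}

def entailed(pred, facts):
    """Every atom of the port predicate is already known to hold."""
    return all(k in facts and facts[k] == v for k, v in pred.items())

def contradicted(pred, facts):
    """Some atom of the port predicate is known to be violated."""
    return any(k in facts and facts[k] != v for k, v in pred.items())

def specialize(node, facts, kept):
    """Record in `kept` the nodes (with their live ports) that survive."""
    ports = LPG.get(node, {})
    for pred, succ in ports.values():
        if entailed(pred, facts):
            # Outcome known statically: drop the node, follow only this port.
            return specialize(succ, facts, kept)
    live = {p: succ for p, (pred, succ) in ports.items()
            if not contradicted(pred, facts)}
    kept[node] = sorted(live)
    for succ in live.values():
        specialize(succ, facts, kept)
    return kept

def embed(sink_facts):
    """Specialize a clone of the LPG for one PRG sink node."""
    return specialize("RxProt", sink_facts, {})
```

For example, `embed({"prot_p": True, "csum_ok": False})` leaves only RxPCsumFailed, while `embed({"prot_p": False})` keeps RxProt with its P port disconnected.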

For reference, here is the full embedded graph: